The Hidden Risks of Third-Party AI

Artificial Intelligence (AI) continues to evolve, with new models seemingly being released every few months to push forward this new technology.

Businesses are increasingly looking at how they can integrate AI solutions as part of their digital transformation efforts to boost productivity, enhance efficiency and decision-making. However, with many businesses not yet implementing their own AI toolsets, employees are adopting the use of third-party AI tools into their workflows, introducing significant cyber security risks, particularly with the handling of sensitive business data.

But why are these a risk?

The Vulnerabilities of Third-Party AI Solutions

The biggest problem with using external AI solutions is that it often necessitates the sharing of business and other proprietary data beyond your secure environment.

This practice exposes businesses to potential data breaches and unauthorised access to proprietary data. OpenAI’s ChatGPT, for example, reserves the right to use personal information, usage data, and even the content that you provide to it to be able to train its models. That information and training data could then be potentially referenced by other people/businesses, exposing the data you provided it without your knowledge.

OpenAI do state that the individual's data is “anonymised,” however, industry experts refer to this as an “take everything now and sort it out later” approach.

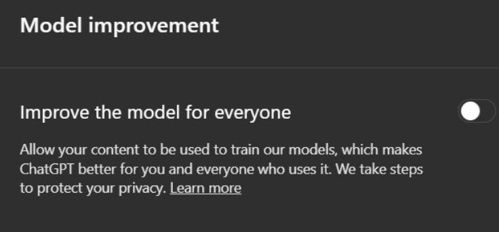

It is worth noting that you can turn off ChatGPT’s ability to train its model on your data, however, data collection is “On” by default.

In 2023, a glitch caused ChatGPT users even caused other users to be able to see other people’s replies and conversation histories.

Figures released in the 2025 UK Gov Cyber Breaches Survey revealed that approximately 50% of all UK businesses experienced at least one security breach or attack during 2024. This includes businesses that experienced a third-party attack, as well as those that experienced an internal breach (intentional or unintentional).

The financial implications associated with these types of breaches can be substantial. According to Gartner, the cost of a third-party cyber breach is typically 40% higher than that of an internal breach. Beyond the financial losses associated with a breach, businesses also face reputational damage with their customer & client base, as well as legal and regulatory repercussions.

Cyber Security Threats Specific to AI Integration

The usage of third-party AI tools that are not controlled or monitored by your IT or internal team can inadvertently become vectors for data breaches. Much like how unmonitored and uncontrolled Shadow IT can present its own cyber security implications, users sharing business data with third-party AI can present their own series of threats. Sharing sensitive business and customer/client data for analysis is likely to not only violate internal business policies, but also data privacy laws in line with GDPR and impact compliance with Cyber Essentials and ISO27001 regulations.

Additionally, many businesses are rushing to adopt “AI Agents” within their infrastructure without further assessing the risks that these may pose. AI Agents are designed to operate autonomously and can be granted access to and process data in ways that violate data privacy laws. An essential element that all businesses must first do when considering the implementation of any AI-based digital transformation tool is an AI readiness check. An AI readiness check ensures that all users have only access to the information and privileges they need to be able to accomplish their role effectively in line with the Policy of Least Privileged Access (PoLP) and often involves the implementation of Privileged Identity and Access Management solutions.

A report by Reuters highlights that AI agents implemented without these considerations in mind could be more susceptible to “prompt injection attacks,” where malicious instructions are embedded within or behind user inputs, leading to unintended actions across your business data and infrastructure. The autonomous nature of these agents means that without proper oversight and management, they might engage in activities that compromise your data security.

The Case for Internal AI Solutions

To mitigate these risks associated with third-party AI, businesses are now turning towards AI solutions that operate within their secure infrastructure and securely interact with data within their siloed business tenancy, ensuring that all user data access is in line with the Policy of Least Privileged Access.

Microsoft 365 Copilot, for example, exemplifies this approach, integrating generative and analytical AI capabilities directly into the Microsoft ecosystem to report on data that each individual user has permission to be able to access through Microsoft Graph. Ensuring that AI-generated responses are grounded in your business and user data context and access. Meaning that “John in Sales” cannot access or report on data that “Debbie in HR” has access to. This integration ensures that data remains within the business’s control, preventing external access, preventing the ability for models to train on this data, and adhering to existing privacy, security, & compliance commitments, including the GDPR, Cyber Essentials & ISO27001.

How can TwentyFour support your business’s AI adoption?

We understand that third-party AI solutions can offer innovative capabilities and are regularly being updated with new capabilities. However, they introduce significant cyber security risks, especially when it comes to your business and your customer/client data privacy & integrity.

Adopting internal AI tools that work within your business tenancy, such as Microsoft 365 Copilot, enables your business to harness the benefits of modern AI solutions, providing enhanced productivity and efficiency across your user base while maintaining control and security over your data.

By ensuring that the AI systems your employees use operate within secure, compliant environments, your business can mitigate risks and protect its most valuable asset: your data.

To take a free FREE AI Readiness check, book an appointment with us to find out more.